Workshop on Computational Approaches to Subjectivity, Sentiment & Social Media Analysis.

Mar 29, 2026

This work is carried out directly within the XenophoBias 🏳️‍🌈 project.

Aug 25, 2025

Paid thesis student position on an interesting social topic within a research project!

Jan 1, 2025

Multimodal Argumentation Mining in Groups Assisted by an Embodied Conversational Agent

Dec 31, 2024

Tackling Biases In or Using Generative AI

Dec 4, 2024

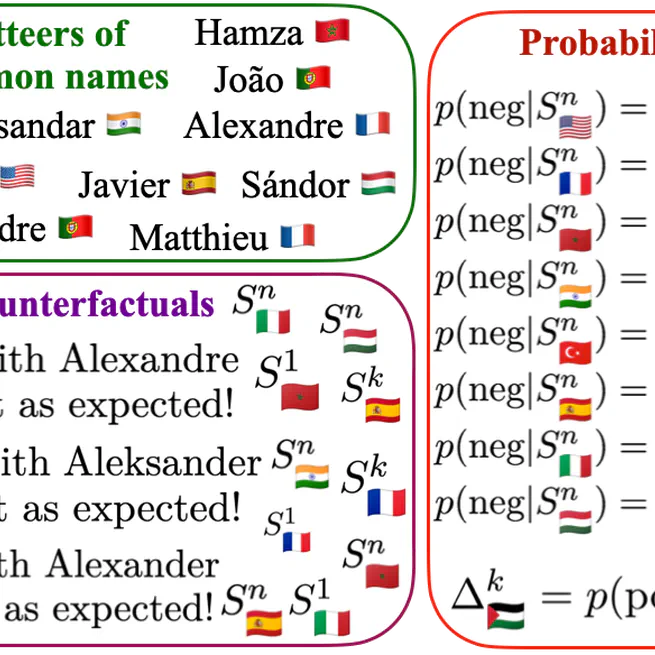

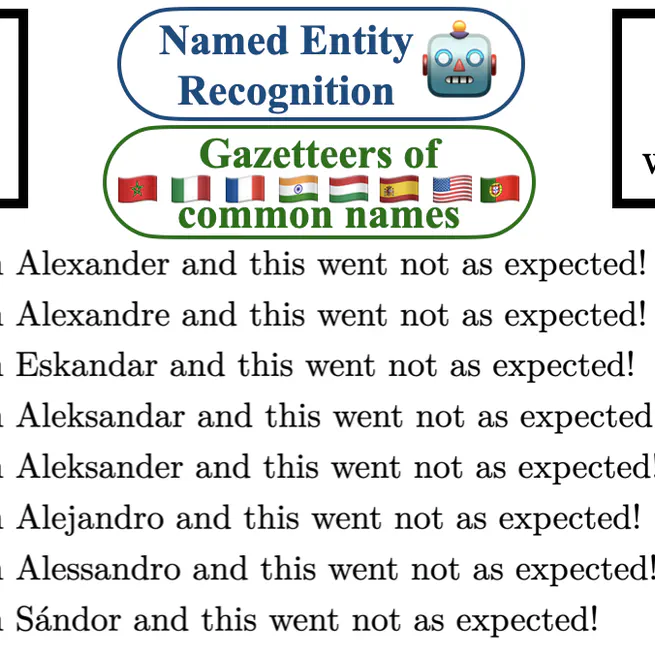

We've developed a method to measure biases in AI models related to named entities from different countries. Our results show that the presence of certain country names can significantly influence predictions, with changes of up to 23% in hate speech detection and up to 60% in emotion analysis! Our findings suggest that these biases are rooted in the pre-training data of language models. We've also uncovered patterns that reveal how the language and the country of origin affect model predictions, with English-speaking country names having a particularly strong effect.
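To give a feel for how a shift in predictions could be quantified, here is a minimal sketch. It assumes one possible metric, the percent change in the share of texts a classifier flags, and it is not necessarily the metric used in the paper. The function names, group labels, and toy predictions are all hypothetical.

```python
from typing import Dict, List


def positive_rate(labels: List[int]) -> float:
    """Share of texts flagged by the classifier (label 1)."""
    if not labels:
        raise ValueError("labels must not be empty")
    return sum(labels) / len(labels)


def percent_change_by_group(
    original: List[int], counterfactual: Dict[str, List[int]]
) -> Dict[str, float]:
    """Percent change in the flagged share, per group, relative to the original texts.

    Assumed metric for illustration only.
    """
    base = positive_rate(original)
    if base == 0:
        raise ValueError("baseline rate is zero; percent change is undefined")
    return {
        group: 100.0 * (positive_rate(labels) - base) / base
        for group, labels in counterfactual.items()
    }


# Toy predictions (1 = flagged), invented for this example.
original = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]        # 4 of 10 flagged
counterfactual = {
    "group_A": [1, 0, 0, 1, 0, 1, 0, 0, 1, 1],   # 5 of 10 flagged
    "group_B": [1, 0, 0, 1, 0, 0, 0, 0, 0, 0],   # 2 of 10 flagged
}

changes = percent_change_by_group(original, counterfactual)
for group, change in changes.items():
    print(f"{group}: {change:+.1f}%")
```

A relative measure like this makes groups comparable across tasks with different base rates, but it becomes unstable when the baseline share is very small.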

Nov 1, 2024

Multicultural Bias Recognition to Detect and Mitigate Racism, Xenophobia and Geographic Inequalities in Multilingual Large Language Models.

Oct 26, 2024

Workshop on Computational Approaches to Subjectivity, Sentiment & Social Media Analysis.

Aug 15, 2024

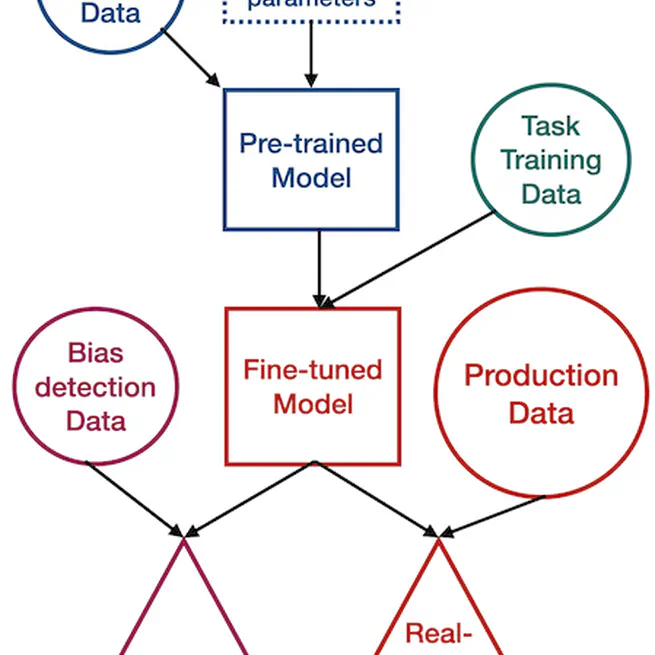

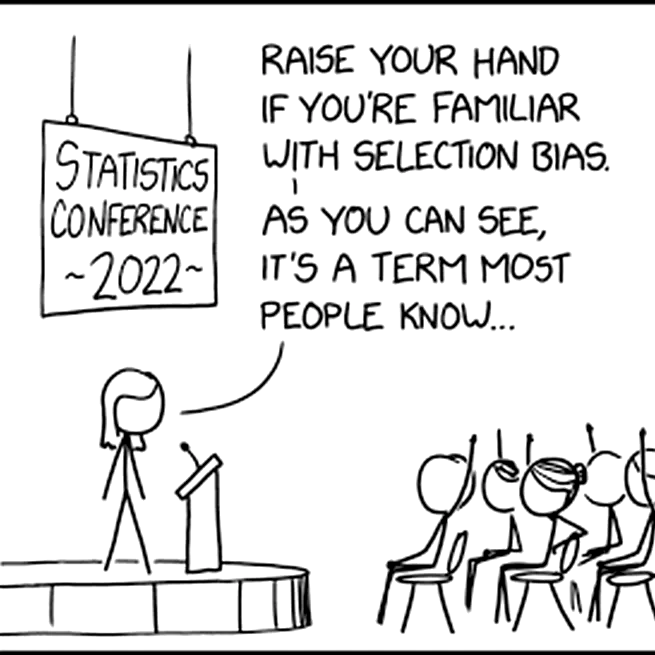

As AI becomes increasingly pervasive in our society, it's crucial that we ensure these systems are fair and unbiased, avoiding the perpetuation of existing inequalities and instead promoting universal access to information and opportunities. In this paper, we break down the concept of bias in machine learning, exploring what it is, why it's a problem, and how to detect and mitigate it using specialized datasets, algorithms, and methods to create more equitable AI systems.

Aug 1, 2024

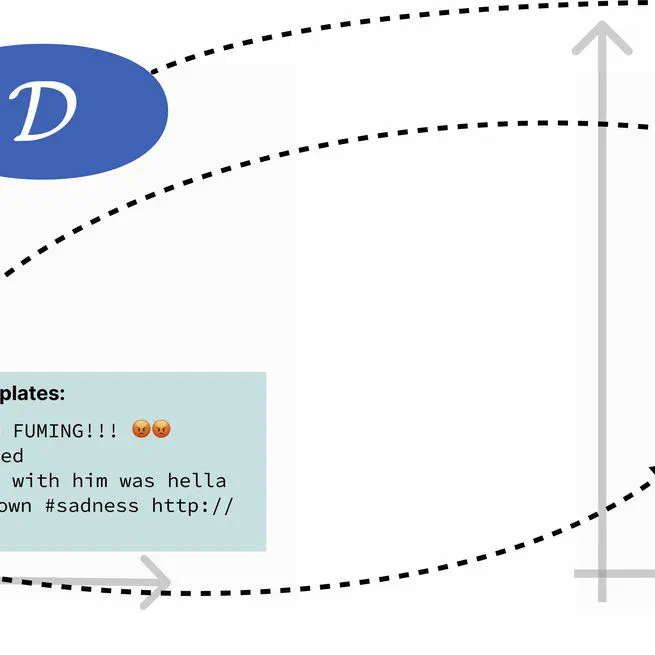

Current bias detection methods in machine learning have their own biases and limitations, so we've developed a new approach that tests fine-tuned classifiers directly on real-world data to identify potential biases. Our method creates counterfactual examples by modifying named entities in the target data. It revealed significant biases in multilingual models, including sentiment analysis and stance recognition models, and shed light on the complex interactions between names, languages, and model predictions. Current models tend to prefer names from countries where the language of the sentence is spoken, which motivates the name AI Xenophobia.
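The idea of counterfactual examples can be sketched in a few lines. This is a simplified illustration, not the project's implementation: the name lists, the `toy_classifier` stand-in, and the function names are hypothetical, and a real setup would call a fine-tuned model and locate entities with a named entity recognizer rather than a fixed string.

```python
import re
from typing import Callable, Dict, List

# Hypothetical name lists per country code, invented for this example.
NAMES_BY_COUNTRY: Dict[str, List[str]] = {
    "US": ["Emily", "Michael"],
    "ES": ["Lucía", "Javier"],
    "DE": ["Hannah", "Lukas"],
}


def make_counterfactuals(text: str, entity: str) -> Dict[str, List[str]]:
    """Replace each whole-word occurrence of `entity` with every candidate name."""
    pattern = re.compile(rf"\b{re.escape(entity)}\b")
    return {
        country: [pattern.sub(lambda _m, n=name: n, text) for name in names]
        for country, names in NAMES_BY_COUNTRY.items()
    }


def change_rate(
    classify: Callable[[str], str], text: str, entity: str
) -> Dict[str, float]:
    """Share of counterfactuals per country whose label differs from the original."""
    original_label = classify(text)
    rates = {}
    for country, variants in make_counterfactuals(text, entity).items():
        changed = sum(classify(v) != original_label for v in variants)
        rates[country] = changed / len(variants)
    return rates


def toy_classifier(text: str) -> str:
    """Deliberately biased stand-in for a fine-tuned sentiment classifier."""
    return "negative" if ("Javier" in text or "Lucía" in text) else "positive"


rates = change_rate(toy_classifier, "Anna loved the concert.", "Anna")
print(rates)
```

Because only the name changes between the original sentence and each variant, any difference in the predicted label can be attributed to the name itself.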

May 1, 2024