How does a Pre-Trained Transformer Integrate Contextual Keywords? Application to Humanitarian Computing

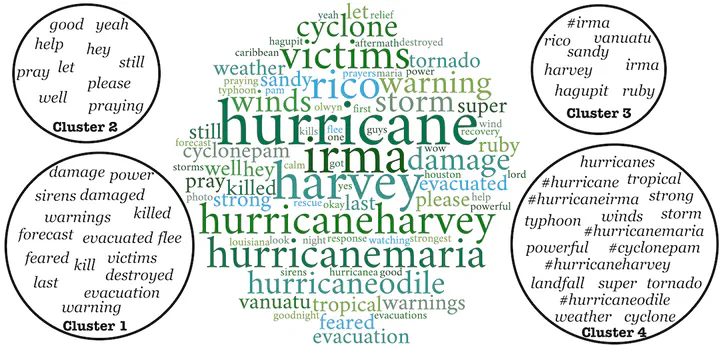

Tokens interacting the most with the event type ’hurricane’ are related to the type of disaster, proper names, and the classes of the task.

Abstract

In a classification task, dealing with text snippets and metadata usually requires multimodal approaches. When the metadata are textual, it is tempting to feed them directly to a pre-trained transformer in order to leverage the semantic information encoded inside the model. This paper describes how to improve a humanitarian classification task by adding the crisis event type to each tweet to be classified. Based on additional experiments on the model's weights and behavior, it identifies how the proposed neural network approach partially overfits the particularities of the Crisis Benchmark, while also highlighting that the model is still clearly learning to use and take advantage of the metadata's textual semantics.
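One common way to give a pre-trained transformer textual metadata is to encode it as the first segment of a BERT-style sentence pair, with the tweet as the second segment. The sketch below assumes that layout (the abstract does not specify the exact input format) and uses whitespace tokenization as a stand-in for a real subword tokenizer; `build_pair_input` is a hypothetical helper name.

```python
from typing import List, Tuple

CLS, SEP = "[CLS]", "[SEP]"


def build_pair_input(event_type: str, tweet: str) -> Tuple[List[str], List[int]]:
    """Hypothetical helper: encode (event type, tweet) as a BERT-style pair.

    Segment A holds the metadata keyword(s), segment B holds the tweet.
    Whitespace tokenization is an assumption standing in for WordPiece/BPE.
    """
    meta_tokens = event_type.lower().split()
    text_tokens = tweet.lower().split()
    tokens = [CLS] + meta_tokens + [SEP] + text_tokens + [SEP]
    # token_type_ids: 0 for [CLS] + segment A + first [SEP],
    # 1 for segment B + final [SEP]
    type_ids = [0] * (len(meta_tokens) + 2) + [1] * (len(text_tokens) + 1)
    return tokens, type_ids


if __name__ == "__main__":
    toks, types = build_pair_input("hurricane", "Roads flooded near the shelter")
    print(toks)
    print(types)
```

For example, `build_pair_input("hurricane", "Roads flooded near the shelter")` yields the tokens `[CLS] hurricane [SEP] roads flooded near the shelter [SEP]` with segment ids `[0, 0, 0, 1, 1, 1, 1, 1, 1]`. With the Hugging Face `transformers` library, calling a BERT tokenizer on two strings, `tokenizer(event_type, tweet)`, produces the same pair layout with subword tokens.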

Type

Publication

In Proceedings of the 18th ISCRAM Conference

It is possible to integrate textual metadata into transformers in order to help the model improve its performance. We show that the model uses the semantics of the keyword metadata by analyzing the attention interactions between the metadata and the text to classify. We applied this to a humanitarian classification task over tweets, using the disaster event type as context, and finally show that this method is also useful to characterize a new event, such as a hurricane, in a data-driven way.
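The attention analysis can be illustrated with a simple scoring rule. The sketch below is one plausible way to rank tokens by how much they interact with the metadata keyword, not necessarily the paper's exact measure: it assumes attention weights indexed as `attn[layer][head][i][j]` (position `i` attending to position `j`), sums attention in both directions between each token and the metadata positions, averages over layers and heads, and then aggregates per token string across a corpus. Both function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple

# attn[layer][head][i][j]: weight with which position i attends to position j
Attention = Sequence[Sequence[Sequence[Sequence[float]]]]


def keyword_interaction(attn: Attention, meta_pos: Sequence[int]) -> List[float]:
    """Score each position by its two-way attention with the metadata
    positions, averaged over all layers and heads (assumed scoring rule)."""
    n_layers, n_heads = len(attn), len(attn[0])
    seq_len = len(attn[0][0])
    scores = [0.0] * seq_len
    for layer in attn:
        for head in layer:
            for j in range(seq_len):
                for m in meta_pos:
                    # keyword -> token j plus token j -> keyword
                    scores[j] += head[m][j] + head[j][m]
    return [s / (n_layers * n_heads) for s in scores]


def top_interacting_tokens(
    examples: Iterable[Tuple[List[str], Attention, List[int]]], k: int = 5
) -> List[str]:
    """examples: (tokens, attn, meta_pos) per tweet. Returns the k token
    strings with the highest mean interaction score over the corpus,
    skipping special tokens and the metadata tokens themselves."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for tokens, attn, meta_pos in examples:
        scores = keyword_interaction(attn, meta_pos)
        for j, tok in enumerate(tokens):
            if j in meta_pos or tok in ("[CLS]", "[SEP]"):
                continue
            totals[tok] += scores[j]
            counts[tok] += 1
    means = {tok: totals[tok] / counts[tok] for tok in totals}
    return sorted(means, key=means.get, reverse=True)[:k]
```

With Hugging Face models, running the encoder with `output_attentions=True` returns one tensor per layer shaped `(batch, heads, seq, seq)`; selecting one example and calling `.tolist()` on each layer gives the nested structure assumed here. Aggregating over all tweets of an event is what would surface, for instance, the disaster-related words and proper names most tied to the keyword "hurricane".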