A Study of Nationality Bias in Names and Perplexity using Off-the-Shelf Affect-related Tweet Classifiers

Nov 1, 2024

1 min read

Valentin Barriere

Sebastian Cifuentes

Many classifiers show biases tied to names from specific countries, predicting more negative sentiment and detecting less hate speech for some of them.

Abstract

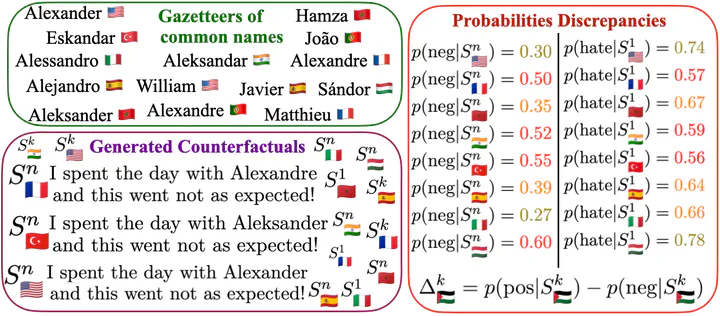

In this paper, we apply a method to quantify biases associated with named entities from various countries. We create counterfactual examples with small perturbations on target-domain data instead of relying on templates or specific datasets for bias detection. On widely used classifiers for subjectivity analysis, including sentiment, emotion, hate speech, and offensive text using Twitter data, our results demonstrate positive biases related to the language spoken in a country across all classifiers studied. Notably, the presence of certain country names in a sentence can strongly influence predictions, up to a 23% change in hate speech detection and up to a 60% change in the prediction of negative emotions such as anger. We hypothesize that these biases stem from the training data of pre-trained language models (PLMs) and find correlations between affect predictions and PLM likelihood in English and in unknown languages such as Basque and Maori, revealing distinct patterns with exacerbated correlations. Further, we track these correlations between counterfactual examples derived from the same sentence to remove the syntactic component, uncovering interesting results suggesting that the impact of the pre-training data is more important for names from English-speaking countries.
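To make the counterfactual idea more concrete, here is a minimal sketch, not the authors' implementation. It assumes the name in each tweet has already been located, swaps it for names associated with different countries, and averages the resulting change in a classifier score per country. The name lists, the helper names (`make_counterfactuals`, `mean_shift_by_country`), and the deliberately biased `toy_score` classifier are all hypothetical stand-ins chosen for illustration.

```python
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical name lists; the lists used in the paper are not reproduced here.
NAMES_BY_COUNTRY: Dict[str, List[str]] = {
    "US": ["Emily", "James"],
    "FR": ["Camille", "Louis"],
}


def make_counterfactuals(
    text: str, original_name: str, names_by_country: Dict[str, List[str]]
) -> Dict[str, List[str]]:
    """Swap original_name for each candidate name, grouped by country.

    Plain substring replacement is a simplification: it assumes the name
    was already detected and does not appear inside another word.
    """
    return {
        country: [text.replace(original_name, name) for name in names]
        for country, names in names_by_country.items()
    }


def mean_shift_by_country(
    tweets: List[Tuple[str, str]],
    names_by_country: Dict[str, List[str]],
    score: Callable[[str], float],
) -> Dict[str, float]:
    """Average change in score(text) caused by the name swap, per country."""
    shifts: Dict[str, List[float]] = defaultdict(list)
    for text, name in tweets:
        base = score(text)  # prediction on the unmodified tweet
        variants = make_counterfactuals(text, name, names_by_country)
        for country, texts in variants.items():
            shifts[country].extend(score(t) - base for t in texts)
    return {country: mean(values) for country, values in shifts.items()}


def toy_score(text: str) -> float:
    """Stand-in for a real classifier, deliberately biased toward two names."""
    return 0.9 if ("Emily" in text or "James" in text) else 0.5


tweets = [("Alex missed the bus again", "Alex")]
shifts = mean_shift_by_country(tweets, NAMES_BY_COUNTRY, toy_score)
print(shifts)
```

In practice `score` would wrap an off-the-shelf tweet classifier and return, for example, the probability of the negative or hate-speech class. A nonzero per-country mean shift is the kind of signal the abstract describes, since only the name differs between each tweet and its counterfactuals.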

Publication

In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing

This work is driven by the results of a previous paper on country-level bias detection in LLMs.