Fondecyt de Iniciacion🗣️💬🤖

Multimodal Argumentation Mining in Groups Assisted by an Embodied Conversational Agent. An example of a social agent from FurChat. Credit: The National Robotarium

Multimodal Argumentation Mining in Groups Assisted by an Embodied Conversational Agent

My role

I am the Principal Investigator of this project. It is a three-year Fondecyt grant of 90,000,000 CLP[^1] from the Chilean National Research Agency. The project is a collaboration with the Université Paris-Saclay, the European Commission’s DGIT, Sorbonne Université and Bamberg University.

The project

Interaction and multimodality are crucial to developing AI models that can understand human-like communication. Human learning occurs through interactions with the environment and with other humans. It involves integrating information from multiple modalities, such as vision and language but also touch and hearing, which together enable us to understand the subtle social meanings behind communication.

Therefore, to create intelligent machines that can understand human non-verbal communication, it is essential to train them on multimodal interactions that mimic those of humans to ensure that they can understand and respond appropriately to complex social phenomena.

The recent computational boom has seen the emergence of seminal studies focusing on multimodal data (Cho, Lu, Schwenk, Hajishirzi, & Kembhavi, 2020; Hasan et al., 2019; Jaegle et al., 2021; J. Li, Li, Xiong, & Hoi, 2022; J. Wang et al., 2022; Zadeh, Chan, Liang, Tong, & Morency, 2019) and on interactions, whether these are textual, like OpenAI’s InstructGPT or Anthropic’s Claude (Bai et al., 2022; Ouyang et al., 2022; Schulman et al., 2022), or multimodal, like Google’s PaLM (Chowdhery et al., n.d.; Chung et al., 2022; Schick, Lomeli, Dwivedi-Yu, & Dessì, 2022) or GPT-4 (Bubeck et al., 2023; OpenAI, 2023; Wu et al., 2023).

These advances show the potential for machines to learn from multimodal interactions and understand human communication, which could revolutionize the way humans socially interact with machines in the future. Nevertheless, today's generative agents are either restricted to unimodal data or do not use the full time series of every modality of a real human-machine social interaction.

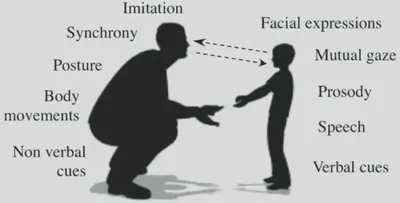

Interaction and multimodality are vital contexts in many social situations. They are also necessary for a machine to understand the world and acquire commonsense knowledge, which is essential when tackling complex human-related tasks. Indeed, humans are social animals who interact with one another. More generally, integrating more context is key to a deep understanding of many phenomena, whether to disambiguate a situation or to reinforce the current estimate. Multimodal interactions allow a deeper understanding of human behavior: in this setting, it is possible to capture a broader part of multimodal natural language (see Figure 1). Studying affective and social phenomena such as opinions, emotions, empathy, distress, stances, persuasiveness or speaker traits makes it possible to greatly improve the machine's response (Pelachaud, Busso, & Heylen, 2021; Zhao, Sinha, Black, & Cassell, 2016), but this task is difficult even with multimodal data. My research focuses on designing and developing methods that integrate the multimodal context and account for how humans influence each other in discussion situations. The research goals of this project fall within this general research area: how to use interactions and the multimodality of non-verbal language to enhance social AI systems.

Multimodality:

Communication is not limited to language, and it is essential to consider other modalities such as vision or audio when building natural language processing (NLP) systems (Baltrušaitis, Ahuja, & Morency, 2017; Liang, Zadeh, & Morency, 2022). Incorporating multiple modalities, or multimodality, is critical to creating more human-like interactions between humans and machines. For instance, while language is the primary means of communication for humans, it is often supplemented by visual and auditory cues such as facial expressions, tone of voice, and gestures. It is therefore important to build multimodal machine learning systems that can interpret and respond to these cues in a human-like manner.

According to Fröhlich, Sievers, Townsend, Gruber, and van Schaik (2019), both human and non-human primate communication is inherently multimodal. As an example, Mehrabian (1971) even states that 55% of the emotional content is in the visual signal (facial expressions and body language), 38% in the vocal signal (intonation and sound of the voice) and 7% in the verbal signal (the meaning of the words and the arrangement of the sentence).

Interaction dynamics:

It is essential to consider the interactive nature of human communication and incorporate it into natural language processing (NLP) systems. A machine that understands the context and flow of the conversation can provide a more natural and seamless interaction with users (Sutskever, Vinyals, & Le, 2014). Z. Li, Wallace, Shen, and Lin (2020) suggested that these systems can provide tailored content and services based on the user’s interests and preferences, leading to more engaging and personalized interactions with the user. As humans, we do not learn merely by looking at our environment, but by interacting with it and with our peers. By incorporating this interactive nature into NLP systems, machines can learn to communicate in a way that is closer to how humans do, making interactions more engaging and effective.

Proposed research project:

This research project aims to study the complex phenomena that characterize social interactions between humans using different media, involving different modalities and data domains. My research objective is to design adaptive models that take as a starting point the specificities of the multimodal interaction: the media used to communicate, the interactants’ social relationship, and the communication modalities used to transfer the information. The general goals are to understand what the users are trying to achieve as a group, what the outcome of this interaction is, and how a social agent helps them reach it.

In particular, this project aims to explore the dynamics of how a group of individuals with polarized opinions can reach a consensus. Within groups of individuals debating hot societal topics and issues, the aim will be to automatically detect and retrieve stances and arguments towards the debate question and, ultimately, to moderate the debate using a human-computer interface designed specifically for such an interaction. To this end, we think that an Embodied Conversational Agent (Cassell, 2001; Pelachaud, 2005), like the one illustrated in Figure 2, would be the most relevant. Indeed, bodily representations structure the way humans perceive the world and the way they perceive other people. Cognitive and social sciences alike have stressed the importance of embodiment in social interaction, highlighting how interacting with others influences how we behave, perceive and think (Smith & Neff, 2018; Tieri, Morone, Paolucci, & Iosa, 2018), including our social behaviors with embodied intelligent agents such as virtual humans and robots (Holz, Dragone, & O’Hare, 2009).

Another goal is to explore the polarization of society’s attitudes towards hot political topics and to study how the difficulty of reaching a consensus varies with the type of topic and with the human values involved in classical argumentation (Kiesel, Weimar, Handke, & Weimar, 2022; Mirzakhmedova et al., 2023). In today’s society, the polarization of opinions on political topics is a common phenomenon that can be observed in many different areas. Debates about societal topics and issues can be especially polarizing and lead to a lack of understanding and cooperation between groups with different perspectives (Livingstone, Fernández Rodriguez, & Rothers, 2020). It is therefore crucial to understand how individuals with polarized opinions can reach a consensus, which is the aim of this research project. To achieve it, the project plans to develop an automatic approach to detect and retrieve the stances and arguments of individuals involved in real-time multimodal debates about hot societal topics.

This research aims to delve into the complexities of group dynamics in polarized debates on societal issues. To achieve this, we will not only automatically detect and retrieve stances and their arguments toward the debate question, but also take into account the multimodal aspects of the debate, such as body language, facial expressions and acoustics, which have been shown to be important for persuasion in vlogs (Nojavanasghari, Gopinath, Koushik, Baltrušaitis, & Morency, 2016; S. Park, Shim, Chatterjee, Sagae, & Morency, 2014; Siddiquie, Chisholm, & Divakaran, 2015) and within debates (Brilman & Scherer, 2015; Mestre et al., 2021). Real-time interaction within the group will be analyzed to understand how individuals respond to each other and how the group as a whole moves toward a consensus.

[^1]: Approximately 100k dollars.