[Undergraduate/graduate thesis] [Paid] Change my view! Multimodal argumentation analysis

Content

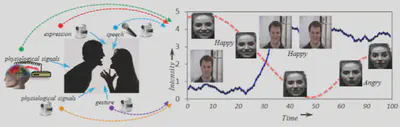

Interaction and multimodality are crucial to developing AI models that can understand human communication. Human learning occurs through interactions with the environment and with other people. It integrates information from multiple modalities, such as vision and language but also touch and hearing, which enables us to grasp the subtle social meaning behind communication. To create machines that understand human communication, it is therefore essential to train them on multimodal interactions that mimic those of humans, so that they can understand and respond appropriately to complex social phenomena.

The research objective is to design adaptive models that take as a starting point the specificities of multimodal interaction: the media used to communicate, the way the users are socially linked, and the modalities they use to transfer information. We take multimodal argumentation mining as a first case study. Dialog systems can help improve the quality of a debate [1,2,3,4]. However, argumentation phenomena rely on multimodal communication and are tied to persuasion and communication skills [5,6,7,8]. For this reason, we focus on multimodal argument mining [9,10,11,12].

Tasks

The student will build multimodal machine learning models that take video as input and detect complex social phenomena such as empathy, persuasion, and emotion, as well as text-based argumentation models. During the thesis, we will also work on building a debate dataset in Chilean Spanish (and hopefully in French) on hot political topics that are seen as polarizing in both countries. Depending on the available time (i.e., the type of tesis/memoria), several research axes will be explored:

- Creation of multimodal models that detect social phenomena in discourse as well as in dyadic or group interactions

- Adaptation or creation of a text-based argumentation annotation scheme for multimodal data

- Creation of the Chilean part of a multicultural database of debates on polarizing topics

Funds will be available to support the research of the student.

### Bibliography

[1] V. Petukhova, T. Mayer, A. Malchanau, and H. Bunt, “Virtual debate coach design: Assessing multimodal argumentation performance,” ICMI 2017 - Proc. 19th ACM Int. Conf. Multimodal Interact., pp. 41–50, 2017.

[2] N. Rach, E. André, K. Weber, W. Minker, L. Pragst, and S. Ultes, “EVA: A multimodal argumentative dialogue system,” ICMI 2018 - Proc. 2018 Int. Conf. Multimodal Interact., pp. 551–552, 2018.

[3] A. Khan, J. Hughes, D. Valentine, L. Ruis, K. Sachan, and A. Radhakrishnan, “Debating with More Persuasive LLMs Leads to More Truthful Answers,” 2024.

[4] L. P. Argyle et al., “AI Chat Assistants can Improve Conversations about Divisive Topics,” ArXiv, 2023.

[5] T. Ohba, C. O. Mawalim, S. Katada, H. Kuroki, and S. Okada, “Multimodal Analysis for Communication Skill and Self-Efficacy Level Estimation in Job Interview Scenario,” ACM Int. Conf. Proceeding Ser., pp. 110–120, 2022.

[6] S. Park, H. S. Shim, M. Chatterjee, K. Sagae, and L.-P. Morency, “Computational Analysis of Persuasiveness in Social Multimedia: A Novel Dataset and Multimodal Prediction Approach,” Proc. 16th Int. Conf. Multimodal Interact. - ICMI ’14, pp. 50–57, 2014.

[7] B. Siddiquie, D. Chisholm, and A. Divakaran, “Exploiting multimodal affect and semantics to identify politically persuasive web videos,” in ICMI 2015 - Proceedings of the 2015 ACM International Conference on Multimodal Interaction, 2015, pp. 203–210.

[8] B. Nojavanasghari, D. Gopinath, J. Koushik, T. Baltrušaitis, and L.-P. Morency, “Deep Multimodal Fusion for Persuasiveness Prediction,” in ICMI 2016 - Proceedings of the 2016 ACM International Conference on Multimodal Interaction, 2016, pp. 1–5.

[9] R. Mestre, R. Milicin, S. E. Middleton, M. Ryan, J. Zhu, and T. J. Norman, “M-Arg: Multimodal Argument Mining Dataset for Political Debates with Audio and Transcripts,” 8th Work. Argument Mining, ArgMining 2021 - Proc., no. 2014, pp. 78–88, 2021.

[10] M. Brilman and S. Scherer, “A Multimodal Predictive Model of Successful Debaters or How I Learned to Sway Votes,” Proc. 23rd ACM Int. Conf. Multimed., pp. 149–158, 2015.

[11] E. Mancini, F. Ruggeri, A. Galassi, and P. Torroni, “Multimodal Argument Mining: A Case Study in Political Debates,” Proc. 9th Work. Argument Min., pp. 158–170, 2022.

[12] T. Shiota and K. Shimada, “The Discussion Corpus toward Argumentation Quality Assessment in Multi-Party Conversation,” Proc. - 2020 9th Int. Congr. Adv. Appl. Informatics, IIAI-AAI 2020, pp. 280–283, 2020.